Touch Accessibility Proposal & Discussion

Note: This is an internal, rough proposal to the Mobile Task Force; it is not for re-use outside the Mobile TF.

This is a supplement to David MacDonald's proposed discussion and reorganization of "Mobile Accessibility: How WCAG 2.0 and Other W3C/WAI Guidelines Apply to Mobile". The purpose of this reorganization is to:

- List possible WCAG Guidelines, Success Criteria, and Techniques, and capture important discussions about them.

- Document which advice cannot become Success Criteria or sufficient techniques in its current wording.

- Begin discussion about whether we can adapt these non-SC advisory recommendations into Success Criteria format, leave them as advisory (or best practices), or make them Sufficient Techniques for an existing Success Criterion. We recognize that almost no one follows advisory or best-practice advice.

- Turn the information into a form digestible as a Normative Extension Spec for WCAG 2.

- After the possible Guidelines, Success Criteria and Techniques, the entire Editor's Draft of Sept. 3 is inserted. It could become the Understanding document for mobile, or be taken apart to serve as the Understanding text for each of the Success Criteria, Guidelines and Techniques.

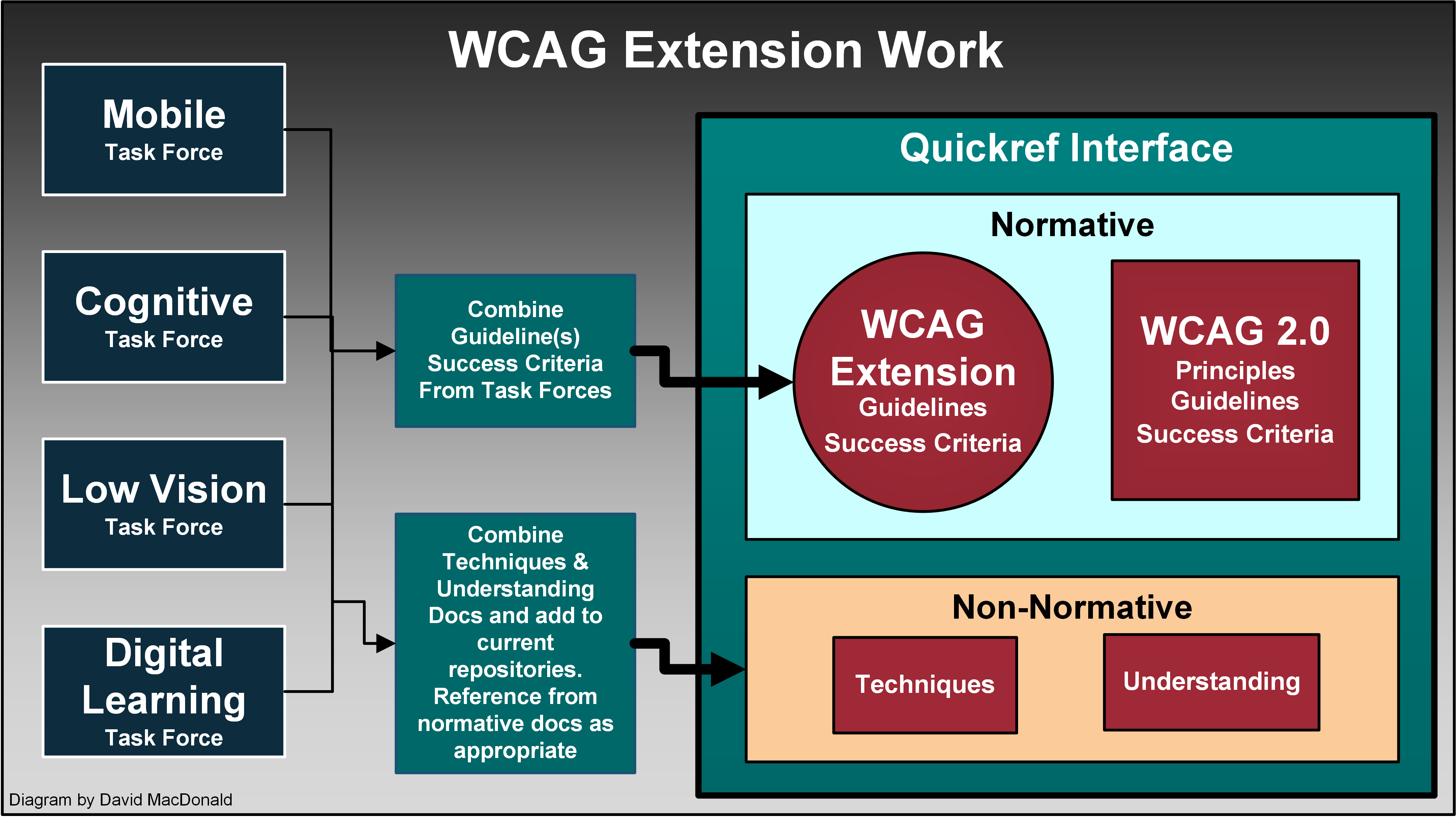

Diagram of how WCAG extensions could relate to the current WCAG 2 integration. The task forces (Mobile, Cognitive, and Low Vision) would develop guidelines, success criteria and techniques separately, then integrate them to resolve conflicts and overlaps, and merge them into a single WCAG extension. The techniques would be collected in a non-normative document as techniques for the extension, and some of them could be referenced from WCAG 2 where they apply to an existing Success Criterion.

New Guideline accepted by consensus of the Mobile Task Force at the meeting Sept. 10, 2015

Note: All proposed new Guidelines and Success Criteria are numbered according to where they are proposed to go in WCAG 2 (which is why their numbers do not start with 3.x, as this section's numbering would suggest).


The above guideline takes into account the discussion below and attempts to address it with an overarching Guideline where detailed Success Criteria can go.

Patrick Lauke: "touch" is not strictly a "mobile" issue. There are already many devices (2-in-1 tablet/laptops, desktop machines with external touch-capable monitors, etc) beyond the mobile space which include touch interaction. So, a fundamental question for me would be: would these extensions be signposted/labelled as being "mobile-specific", or will they be added to WCAG 2 core in a more general, device-agnostic manner? Further, though I welcome the addition of SCs relating to touch target size and clearance, I'm wondering why we would not also have the equivalent for mouse or stylus interfaces...again, in short, why make it touch-specific, when in general the SCs should apply to all "pointers" ("mouse cursor, pen, touch (including multi-touch), or other pointing input device", to borrow some wording from the Pointer Events spec http://www.w3.org/TR/pointerevents/)?

Detlev: Hi Patrick, I didn't intend this first draft to be restricted to touch-only devices, just to capture that input mode. It's certainly good to capture input commonalities where they exist (e.g., activate elements on touchend/mouseup).

Patrick: Or, even better, just relying on the high-level focus/blur/click ones (though even for focus/blur, most touch AT don't fire them when you'd expect them - see http://patrickhlauke.github.io/touch/tests/results/#mobile-tablet-touchscreen-assistive-technology-events and particularly http://patrickhlauke.github.io/touch/tests/results/#desktop-touchscreen-assistive-technology-events where none of the tested touchscreen AT trigger a focus when moving to a control)

Jonathan Avila: Regarding touch start and end: we are thinking of access without AT by people with motor impairments, who may tap the wrong control before sliding to locate the correct one. This is new and different from SC 3.2.x. I understand and have seen what you say about focus events and the absence of key events, so that is a separate matter to address.

Detlev Fischer: But then there are touch-specific things: not just touch target size, as mentioned by Alan, but also touch gestures without a mouse equivalent, such as swiping, split-tapping, long presses, rotate gestures, cursed L-shaped gestures, etc.

Patrick: It's probably worth being careful about distinguishing between gestures that the *system / AT* provides, and which are then translated into high-level events (e.g. swiping left/right which a mobile AT will interpret itself and move the focus accordingly) and gestures that are directly handled via JavaScript (with touch and pointer events specific code) - also keeping in mind that the latter can't be done by default when using a touchscreen AT unless the user explicitly triggers some form of gesture passthrough.

Detlev: That's a good point. Thinking of the perspective of an AT user carrying out an accessibility test, or even any non-programmer carrying out a heuristic accessibility evaluation using browser toolbars and things like Firebug, I wonder what is implied in making that distinction, and how it might be reflected in documented test procedures.

Are we getting to the point where it becomes impossible to carry out accessibility tests without investigating in detail the chain of events fired?

Patrick: For the former, the fact that focus is moved sequentially using a swipe left/right rather than TAB/SHIFT+TAB does not, IMHO, raise any new issues that the existing keyboard-specific SCs would not cover if they were worded in more input-agnostic terms. The same goes for not trapping focus, etc.

Detlev: One important difference being that swiping on mobile also gets to non-focusable elements. While a script may keep keyboard focus safely inside a pop-up window, a SR user may swipe beyond that pop-up unawares (unless the page background has been given the aria-hidden treatment, and that may not work everywhere as intended). Also, it may be easier to reset focus on a touch interface (e.g. 4-finger tap on iOS) compared to getting out of a keyboard trap if a keyboard is all you can use to interact.
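Here is a minimal TypeScript sketch of the "aria-hidden treatment" Detlev mentions. While a pop-up is open, every sibling of the pop-up gets `aria-hidden="true"`, so swiping with a screen reader should not reach the page behind it. When the pop-up closes, the previous values are restored. The `AttributeTarget` interface and `hideBackground` name are hypothetical. DOM elements satisfy the interface, e.g. `Array.from(document.body.children)`. As Detlev says, this may not work as intended with every screen reader, so it needs testing with the actual AT.

```typescript
// Hypothetical minimal interface; DOM Element objects already satisfy it.
interface AttributeTarget {
  getAttribute(name: string): string | null;
  setAttribute(name: string, value: string): void;
  removeAttribute(name: string): void;
}

// Hide every sibling of the pop-up from AT and return a function that
// restores each sibling's previous aria-hidden value (or its absence).
function hideBackground(
  siblings: AttributeTarget[],
  popup: AttributeTarget,
): () => void {
  const saved: Array<[AttributeTarget, string | null]> = [];
  for (const el of siblings) {
    if (el === popup) continue; // never hide the pop-up itself
    saved.push([el, el.getAttribute("aria-hidden")]);
    el.setAttribute("aria-hidden", "true");
  }
  return () => {
    for (const [el, previous] of saved) {
      if (previous === null) el.removeAttribute("aria-hidden");
      else el.setAttribute("aria-hidden", previous);
    }
  };
}
```

This assumes the pop-up is a direct child of `body`, so that hiding its siblings covers the whole background. A page would call `hideBackground(...)` when the pop-up opens and the returned function when it closes.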

Patrick: For the latter, though, I agree that this would be touch (not mobile though) specific...and advice should be given that custom gestures may be difficult/impossible to even trigger for certain users (even for single touch gestures, and even more so for multitouch ones).

Detlev: Assuming a non-expert perspective (say, a product manager or company strategist), when looking at Principle 2 Operable it would be quite intelligible to talk about:

2.1 Keyboard Accessible

2.5 Touch Accessible

2.6 Pointer Accessible (It's not just Windows and Android with KB, Blackberry has a pointer too)

2.7 Voice Accessible

While the input modes touch and pointer share many aspects and (as you show) touch events are actually mapped onto mouse events, there might be enough differences to warrant different Guidelines.

For example, you are right that there is no reason why target size and clearance should not also be defined for pointer input, but the actual values would probably be slightly lower in a "Pointer Accessible" Guideline. A pointer a) is more pointed (sigh) and therefore more precise, and b) does not obliterate its target the way a fingertip does.

Another example: an SC for touch might address multi-touch gestures, whereas a mouse has no swipe gesture. SCs under Touch Accessible may also cover two input modes: the default (direct interaction) and, when the screen reader is turned on, the two-phase indirect interaction of focusing, then activating.

Of course it might be more elegant to just make Guideline 2.1 input-mode agnostic, but I wonder whether the resulting abstraction would be intelligible to designers and testers. I think it would be worthwhile to take a stab at *just drafting* an input-agnostic Guideline 2.1 "Operable in any mode" and draft SCs below it, to get a feel for what tweaking core WCAG might look like and how Success Criteria and techniques down the line may play out.

Interfaces catering to both mouse and touch input often lead to horrible, abject usability. Watch low vision touch users swear at the Windows 8 (Metro) built-in magnification via indirect input on sidebars (an abomination probably introduced because mice don't know how to pinch-zoom). Watch Narrator users struggle when swipe gestures get too close to the edge and unintentionally reveal the charms bar, or the slide-in bars at the top and bottom of apps. Similar things happen when BlackBerry screen reader users unintentionally trigger the common swipes from the edges, which BlackBerry thought should be retained even with the screen reader on. And finally, watch mouse users despair because they cannot locate a close button in a Metro view: it is only revealed when they move the mouse right to the top edge of the screen.

Mike Pluke: I’d personally prefer something like “character input interface” [instead of keyboard interface] to further break the automatic assumption that we are talking about keyboards or other things with keys on them.

Gregg Vanderheiden: This note is great:

- Note 1: A keyboard interface allows users to provide keystroke input to programs even if the native technology does not contain a keyboard.

I would add a Note 2:

- Note 2: Full control from a keyboard interface allows control from any input modality, since it is modality agnostic. It can allow control from speech, Morse code, sip and puff, eye gaze, gestures, an augmentative communication aid, or any other device or software program that can take any type of user input and convert it into keystrokes. Full control from a keyboard interface is to input what text is to output: text can be presented in any sensory modality, and keyboard interface input can be produced by software using any input modality.

RE “character input interface”

- We thought of that, but you need more than the characters on the keyboard. You also need arrow keys, Return, Escape, etc.

- We thought of “encoded input” (but that is Greek to most people), ASCII (but that is not international) and Unicode (but that is undefined and really geeky).

Jonathan: While I agree the term [Keyboard Interface] is misleading, in desktop terms testing with a physical keyboard is one good way to make sure the keyboard interface is working. Even on mobile devices, supporting a physical keyboard through the keyboard interface helps people with disabilities and is an important test. It just doesn't go far enough.

David MacDonald: +1 to "it doesn't go far enough."

Patrick: Sure, but this SC would be relegated to the "touch/mobile" extension to WCAG, which somebody designing a desktop/mouse site may not look into (again, going back to the fundamental problem of WCAG extensions, but I digress).

David: WCAG 2 is a stable document, entrenched in many jurisdictional laws, which is a good thing. So far, unless something drastically changes in consensus or in the charter approval, the extension model is what we are looking at. However, we may want to explore the idea of incorporating all these recommendations into failure techniques or sufficient techniques for *existing* Success Criteria in WCAG core, which would ensure they get first class treatment in WCAG proper. This would ensure that they are not left out of jurisdictions that didn't add the extension. But some of the placement in existing Success Criteria could be pretty contrived. Most would probably end up in 1.3.1 (like everything else).


Gregg: What good is one dimension? If you have any physical disability, you need to specify both dimensions. ALSO: for what size screen? An Apple Watch? An iPhone 4? All buttons would have to be huge to comply on very small screens, and you don't know what size the screen is, so you can't use absolute measures unless you assume the smallest screen.

Patrick:

- Regarding Gregg's question about 9 mm: it would be good to clarify whether we mean *physical* mm or CSS mm. Note that many guidelines (such as Google's guidelines for Android, or Microsoft's app design guidelines) use measurements such as dips (device-independent pixels) precisely to avoid dealing with differences in actual physical device dimensions. It is the device/OS's responsibility to map its actual physical size to a reasonable dips measure, so authors can take it as a given that a dip is reasonably uniform across devices.

- On a more general level, I questioned why there should be an SC relating to target size for *touch*, but no equivalent SC for mouse or stylus interaction.
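To make the CSS mm versus physical mm distinction concrete, here is a small TypeScript sketch of the arithmetic. CSS fixes 1in = 96px = 25.4mm, so a CSS millimetre is defined through the CSS reference pixel, not by physical size. The second function estimates the physical size of a CSS length on a particular screen. The device figures used below (devicePixelRatio 2 at 326 pixels per inch, roughly an iPhone 4) are illustrative assumptions, and the function names are hypothetical.

```typescript
const CSS_PX_PER_INCH = 96; // fixed by CSS Values and Units
const MM_PER_INCH = 25.4;

// Convert a length in CSS millimetres to CSS pixels.
function cssMmToCssPx(mm: number): number {
  return (mm * CSS_PX_PER_INCH) / MM_PER_INCH;
}

// Estimate the physical length (real millimetres) of a CSS pixel length on a
// device with the given devicePixelRatio and physical pixel density (ppi).
function cssPxToPhysicalMm(cssPx: number, devicePixelRatio: number, ppi: number): number {
  const devicePixels = cssPx * devicePixelRatio;
  return (devicePixels / ppi) * MM_PER_INCH;
}

const nineCssMm = cssMmToCssPx(9);
// Assumed device: devicePixelRatio 2 at 326 ppi.
console.log(nineCssMm.toFixed(2), cssPxToPhysicalMm(nineCssMm, 2, 326).toFixed(2)); // 34.02 5.30
```

Under these assumptions, a "9 mm" CSS target would cover only about 5.3 mm of actual screen. That is why the SC wording needs to say which millimetre it means.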

Jon: My guess is that the touch target size would need to be larger than a mouse pointer's target area, so a touch target requirement would cover mouse targets as well.

Patrick: Too small a target size can be just as problematic for users with tremors, mobility impairments, reduced dexterity, etc.

Jon: That's exactly who this SC is aimed at. It is not specifically aimed at screen reader users or low vision users, but at people with motor impairments.

Patrick: I know it's not the remit of the TF, but I'd argue that this is exactly the sort of thing that would benefit from being a generalised SC applicable to all manner of pointing interaction (mouse, pen, touch, etc). Or is the expectation that there will be a separate TF for "pen and stylus TF", "mouse interaction TF", etc? (these two points also apply to 2.5.5)


Patrick: I like the concept, but the wording that follows (requiring that things only trigger if the touch point is still within the same element) is overly specific/limiting in my view. It is also partly out of the developer's control. For instance, in current iOS and Android, touch events have a magic "auto-capture" behavior: you can start a touch sequence on an element, move your touch point outside of the element, and release it, and it will still fire touchmove/touchend events (but not click, granted). Pointer Events include an explicit feature to capture pointers and to emulate the same behavior as touch events. However, it would be possible to make taps/long presses revocable by, for instance, prompting the user with a confirmation dialog as a result of a tap/press (particularly if the action is significant/destructive). This would still fulfill the "revocable" requirement, just in a different way from "must be lifted inside the element". In short: I'd keep the principle of "revocable" actions, but would not pin down "the finger (touch point, whatever... keeping it a bit more agnostic) is lifted inside the element".

Gregg: This will make interfaces unusable by some people who cannot reliably land and release within the same element. Also, only a relatively small number of people know about this. And if someone hits something by mistake, they usually don't have the motor control to use this approach. The ability to reverse or undo is better. I think that is already in WCAG, though, with caveats.
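For reference, here is a minimal TypeScript sketch of the "activate only if the touch point is lifted inside the element" behaviour under discussion. Touch events keep reporting touchmove/touchend to the original element even after the finger leaves it, so the release point has to be checked against the element's bounds explicitly. The class and method names are hypothetical. In a browser, `bounds` could come from `getBoundingClientRect()` and points from the events' `clientX`/`clientY` (for `touchend`, via `changedTouches`), with `touchcancel`/`pointercancel` mapped to `cancel()`. Given Gregg's objection, this would complement undo or confirmation for significant actions, not replace it.

```typescript
interface Point {
  x: number;
  y: number;
}

interface Rect {
  left: number;
  top: number;
  right: number;
  bottom: number;
}

function contains(r: Rect, p: Point): boolean {
  return p.x >= r.left && p.x <= r.right && p.y >= r.top && p.y <= r.bottom;
}

// Fires onActivate only when both press and release happen inside the bounds,
// so sliding the finger off the control before lifting it revokes the action.
class UpEventActivator {
  private pressed = false;
  private readonly bounds: Rect;
  private readonly onActivate: () => void;

  constructor(bounds: Rect, onActivate: () => void) {
    this.bounds = bounds;
    this.onActivate = onActivate;
  }

  down(p: Point): void {
    this.pressed = contains(this.bounds, p);
  }

  up(p: Point): void {
    const activate = this.pressed && contains(this.bounds, p);
    this.pressed = false;
    if (activate) this.onActivate();
  }

  // Called when the system cancels the touch (e.g. touchcancel/pointercancel).
  cancel(): void {
    this.pressed = false;
  }
}
```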


Gregg: You have no control of how it is changed – so how can you be expected to have anything still work?

David MacDonald: How about this:

2.5.4 Modified Touch (2nd draft): For pages and applications that support touch, all functionality of the content is operable through touch gestures with and without system assistive technology activated. (Level A)

David: In the understanding document for this SC we would explain that touch gestures with VO on could be and probably would be the VO equivalent to the standard gestures used with VO off.

Patrick: It's not possible to recognise gestures when VoiceOver is enabled, because VO intercepts gestures for its own purposes (similar to how desktop AT intercept key presses) unless the user explicitly uses a pass-through gesture. Does this imply that interfaces also need to work with just an activation/double-tap? I.e., does double-tap count as a "gesture" in this context? If not, it's not technically possible for web pages to force pass-through (there is no equivalent to role="application" for desktop/keyboard handling).

David: VO uses gestures for its own purposes and then adds gestures to substitute for those it replaced, i.e., a VO three-finger swipe = a one-finger swipe. I'm suggesting that we require that everything that can be accomplished with gestures with VO off can also be accomplished with VO on.

Patrick: Not completely, though. If I build my own gesture recognition from basic principles (tracking the various touchstart/touchmove/touchend events), the only way that gesture can be passed on to the JS when VO is activated is if the user performs a pass-through gesture, followed by the actual gesture I'm detecting via JS. Technically, this means that yes, even VO users can make any arbitrary gesture detected via JS, but in practice, it's - in my mind - more akin to mouse-keys (in that yes, a keyboard user can nominally use any mouse-specific interface by using mouse keys on their keyboard, just as a touch-AT user can perform any custom gesture...but it's more of a last resort, rather than standard operation). Also, not sure if Android/TalkBack, Windows Mobile/Narrator have these sorts of pass-through gestures (even for iOS/VO, it's badly documented...no mention of it that I could find on any official Apple sites). In short, to me this still makes it lean more towards providing all functionality in other, more traditional ways (which would then also work for mobile/tablet users with an external keyboard/keyboard-like interface). Gestures can be like shortcuts for touch users, but should not replace more traditional buttons/widgets, IMHO. This may be a user setting perhaps? Choose if the interface should just rely on touch gestures, or provide additional focusable/actionable controls?
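Patrick's idea of gestures as shortcuts for conventional controls could look roughly like the following TypeScript sketch. A swipe is classified from its start and end points and mapped onto the same `next()`/`previous()` methods that ordinary Previous/Next buttons would call. Nothing then depends on the gesture reaching the page, which it does not with VoiceOver on. The `Carousel` class, the 50 px threshold and the function names are hypothetical.

```typescript
type Direction = "left" | "right" | "up" | "down";

interface Point {
  x: number;
  y: number;
}

// Classify a single-finger swipe from its start and end points
// (screen coordinates, y grows downwards). Returns null for short movements.
function classifySwipe(start: Point, end: Point, minDistance = 50): Direction | null {
  const dx = end.x - start.x;
  const dy = end.y - start.y;
  if (Math.max(Math.abs(dx), Math.abs(dy)) < minDistance) return null; // treat as a tap
  if (Math.abs(dx) >= Math.abs(dy)) return dx > 0 ? "right" : "left";
  return dy > 0 ? "down" : "up";
}

// Hypothetical carousel: the same methods back both the gesture and the buttons.
class Carousel {
  index = 0;
  readonly count: number;

  constructor(count: number) {
    this.count = count;
  }

  next(): void {
    this.index = Math.min(this.index + 1, this.count - 1);
  }

  previous(): void {
    this.index = Math.max(this.index - 1, 0);
  }
}

// Gesture shortcut: swipe left = next, swipe right = previous.
function onSwipe(carousel: Carousel, start: Point, end: Point): void {
  const direction = classifySwipe(start, end);
  if (direction === "left") carousel.next();
  else if (direction === "right") carousel.previous();
}
```

In a page, the visible Previous/Next buttons would call `carousel.previous()`/`carousel.next()` from their `click` handlers, which a screen reader user can trigger with a double-tap.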

Jonathan: I also worry that people might try to say that pass through gestures would meet this requirement.

David: How could we fix this concern? I think WCAG 2.1.1 already covers the need for keyboard use (without MouseKeys). We could perhaps plug the hole so that authors cannot rely on the pass-through gesture, in the same way that 2.1.1 does not let them rely on MouseKeys.

Patrick: Does this imply that interfaces need to be made to also work with just an activation/double-tap? I.e., does double-tap count as a "gesture" in this context?

Jonathan: In theory I think this would benefit people with prosthetics too. For example, many apps support zoom by double tapping without requiring a pinch. You should be able to control all actions from touch (e.g. through an API) and also through the keyboard. But I think it would be too constrictive to require operation by tap, double tap, long tap, etc. Since screen readers and the API support actions through rotors and other gestures, it would seem that API-based and keyboard access would be sufficient. But you bring up a good point: while this might make sense for native apps, mobile web apps don't have a good way, without IndieUI, to expose actions to the native assistive technologies. This is a key area that needs to be addressed by other groups, and it may be addressed by other options such as WAPA. But we do need to be careful and do some research, as the abilities we need may not yet be supported or be part of a mature enough specification.

David: It would be great to operate everything through taps, even creating a Morse-code type of thing where all gestures could be done with taps for those who can't swipe, but it would require a lot more functionality than is currently available. I think we should park it, and perhaps provide it as a best practice technique under this Success Criterion.

Gregg: Do they have a way to map screen reader gestures [to avoid] colliding with special gestures in apps? This was not meant to replace the use of gestures, but to provide a simple alternate way to get at them if you can't make them (because you physically can't, or because of collisions).

Patrick: Not to my knowledge. iOS does have some form of gesture recording with Assistive Touch, but I can't seem to get it to play ball in combination with VoiceOver, and in the specific case of web content (though this may be my inexperience with this feature). On Android/Win Mobile side, I don't think there's anything comparable, so certainly no cross-platform, cross-AT mechanism.

Jonathan: This is only one aspect of the situation. It's not so much colliding gestures as a collision between how the touch interface is reconfigured to trap gestures and the issue of not being able to see where the gesture is being drawn. For iOS native apps, there are:

- An actions API that allows apps to associate custom actions with an actions rotor, or to assign a default action to a magic tap gesture.

- A pass-through gesture: tap and hold, then perform the gesture.

- A trait that can be assigned to allow direct UI interaction with the element, allowing screen reader users to sign their name, etc.

Take, for example, a hypothetical knob on a web page. Without a screen reader, I can turn that knob to specific settings. As a developer, I can implement keystrokes, let's say Control+1, Control+2, etc., for the different settings. I have then met the letter of the success criterion by providing a keyboard interface through JavaScript shortcut keystroke listeners. In practical reality, though, as a mobile screen reader user who does not carry around a keyboard, I have no way to trigger those keystrokes.

Patrick: Actually, it gets worse than that. As I noted previously, not all mobile/tablet devices with a paired keyboard actually send keyboard (keydown, keypress) events all the time. In iOS, with a paired keyboard (but no VO enabled), the keyboard is completely inactive except when the user is in a text entry field or similar (basically, it only works in the same situations in which iOS' on-screen keyboard would be triggered). When VO is enabled, the keyboard still only sends keyboard events when in a text entry field etc. In all other situations, every keystroke is intercepted by VO (and again, there is no mechanism to override this with role="application" or similar). In short, for iOS you can't rely on anything that listens for keydown/keypress either. In Android, the situation is more similar to what would happen on desktop (from what I recall at least... I would need to do some further testing), in that the keyboard always works/fires key events. I haven't had a chance to test Windows Mobile with a paired keyboard yet, but I suspect it works in a similar way.

David: When we were tying up WCAG in the years 2000-2008, we never envisioned people who are blind using a flat screen to operate a mobile device. I think it was a huge leap forward for our industry, and we need to foster their relationship with their devices and run with it. Keyboard requirements are in place, and they are not going away. Our job now is to look at the gaps and see if there is anything we can do to ensure these users can continue to use flat screens, which have levelled the playing field for the blind, and to foster authoring that doesn't screw that up.

Here's a rewrite addressing the concerns.

2.5.4 Modified Touch (3rd draft): For pages and applications that support touch, all functionality of the content is operable through touch gestures with and without system assistive technology activated, without relying on pass through gestures on the system (Level A)

Patrick: As said, when touch AT is running, all gestures are intercepted by the AT at the moment (unless you mean taps?). And only iOS, to my knowledge, has a passthrough gesture (which is not announced/exposed to users, so a user would have to guess that if they tried it, something would then happen).

If the intention was to also mean "taps", this is lost on me and possibly the majority of devs, as "gesture" usually implies a swipe, pinch, rotation, etc, which are all intercepted. [ED: skimming towards the end of the document, I see that in 3.3 Touchscreen Gestures "taps" are listed here. This, to me - and I'd argue most other devs - is confusing...I don't normally think of a "tap" as a "gesture"] So this SC (at least the "touch gestures with ... assistive technology activated") part is currently technically *impossible* to satisfy (for anything other than taps), except by not using gestures or by providing alternatives to gestures like actionable buttons.

This can be clarified in the prose for the SC, but perhaps a better way would be to drop the "gestures" word, and then the follow-up about passthrough, leaving a much simpler/clearer:

"2.5.4 Touch: For pages and applications that support touch, all functionality of the content is operable through touch with and without system assistive technology activated (Level A)"

I'm even wondering about the "For pages and applications that support touch" preamble...why have it here? Every other SC relating to touch should then also have it, for consistency? Or perhaps just drop that bit too?

"2.5.4 Touch: All functionality of the content is operable through touch with and without system assistive technology activated (Level A)"

OR is the original intent of this SC to be in fact

"2.5.4 Touch: For pages and applications that support touch *GESTURES*, all functionality of the content is operable through touch gestures with and without system assistive technology activated, without relying on pass through gestures on the system (Level A)"

Is this about gestures? If so, it's definitely technically impossible to satisfy this SC at all currently (see above), so I'd be strongly opposed to it.

Detlev: Maybe it's better to separate the discussion of terminology from the discussion of reworking the mobile TF Doc.

I personally don't get why someone would choose to call swiping or pinching a gesture, but refuse to apply this term to tapping. What about double and triple taps? Taps with two fingers? Long presses? Split taps? To me, it makes sense to call *all* finger actions applied to a touch screen gestures. I simply don't get why tapping would not count. Where do you draw the line, and why? A related issue is the distinction between touch gestures and button presses. With virtual (non-tactile, but fixed-position capacitive) buttons, you already get into a grey area. The drafted Guideline 2.5 "Touch Accessible: All functionality available via touch" probably needs to be expanded to account for devices with physical (tactile or capacitive) device buttons, which would mean something like:

Guideline 2.5 OR SC 2.5.4 (4th draft): On devices that support touch input, all functions are available via touch or button presses also after AT is turned on (i.e. without the use of external keyboards).

Detlev: Not well put, but you get the idea.

David: I think when we say Touch, we mean all touch activities such as swipes, taps, gestures, etc.: anything you do to operate the page by touching it. Regarding gestures, all gestures are intercepted by VoiceOver, but VoiceOver provides replacements for all standard gestures, unless the author does something dumb to break them. I think we need, at a minimum, to ensure that the standard replacement gestures are not messed up. For instance, I recently tested a high-profile app for a major sports event. It had a continuous-load feature, like Twitter, that kept populating as you scrolled down with one finger. Turn on VoiceOver and try the three-finger equivalent of a one-finger swipe to do a standard scroll, and nothing happens: the page does not populate. The blind user has hit a brick wall. I think we have to ensure this type of thing doesn't happen in WCAG-conforming content.
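For web content, one plausible cause of this kind of failure is tying the "load more" trigger to touch events such as `touchmove`, which a screen reader's own scroll gesture does not deliver to the page. This is an assumption: we don't know how that app was built. A more robust sketch, in TypeScript, decides from the scroll position alone, so it works however the scroll was performed. `ScrollState`, `shouldLoadMore`, `makeScrollHandler` and the 200 px threshold are hypothetical names and values.

```typescript
// Snapshot of a scroll container's geometry in CSS px, e.g. read from
// document.scrollingElement inside a "scroll" event listener.
interface ScrollState {
  scrollTop: number;
  clientHeight: number;
  scrollHeight: number;
}

// True when the visible area is within thresholdPx of the end of the content.
function shouldLoadMore(s: ScrollState, thresholdPx = 200): boolean {
  const remaining = s.scrollHeight - (s.scrollTop + s.clientHeight);
  return remaining <= thresholdPx;
}

// Wraps a loader so that a burst of scroll events starts at most one load at a time.
function makeScrollHandler(
  load: () => Promise<void>,
  thresholdPx = 200,
): (s: ScrollState) => Promise<void> {
  let loading = false;
  return async (s: ScrollState): Promise<void> => {
    if (loading || !shouldLoadMore(s, thresholdPx)) return;
    loading = true;
    try {
      await load();
    } finally {
      loading = false;
    }
  };
}
```

In a page, the handler would run from a `scroll` listener, or an `IntersectionObserver` could watch a sentinel element at the end of the list. Both respond to the scroll position rather than to a particular input method. A visible "Load more" button would be a further fallback.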

2.5.4 Modified Touch (5th draft): All functions available by touch (or button presses) are still available by touch (or button presses) after system assistive technology is turned on.

no comments:

2.5.5 Touch Target Clearance: The center of each touch target has a distance of at least 9 mm from the center of any other touch target, except when the user has reduced the default scale of content. (Level AA)


David: Isn't this the same as 2.5.2 above (9 mm distance)?

Gregg: This is essentially a 9x9 mm target, measured center to center, and it has the same problems as above. 9 mm on what mobile device?
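Here is a sketch of how the 2.5.5 check could be automated for rendered content, in TypeScript. It rests on two stated assumptions. First, target rectangles are in CSS pixels, measured at the default scale. Second, "9 mm" is read as 9 CSS mm, which, as Gregg's question shows, is not the same as 9 physical mm on every device. The `Target` shape and function name are hypothetical.

```typescript
// Hypothetical target rectangle in CSS px, e.g. from getBoundingClientRect().
interface Target {
  x: number;
  y: number;
  width: number;
  height: number;
}

const CSS_PX_PER_MM = 96 / 25.4; // CSS: 1in = 96px = 25.4mm

// Return index pairs of targets whose centres are closer than minMm CSS millimetres.
function clearanceViolations(targets: Target[], minMm = 9): Array<[number, number]> {
  const minPx = minMm * CSS_PX_PER_MM;
  const centres = targets.map((t) => ({ cx: t.x + t.width / 2, cy: t.y + t.height / 2 }));
  const violations: Array<[number, number]> = [];
  for (let i = 0; i < centres.length; i++) {
    for (let j = i + 1; j < centres.length; j++) {
      const dx = centres[i].cx - centres[j].cx;
      const dy = centres[i].cy - centres[j].cy;
      if (Math.sqrt(dx * dx + dy * dy) < minPx) violations.push([i, j]);
    }
  }
  return violations;
}
```

Two 20 × 20 px buttons placed 30 px apart (centre to centre) would be flagged, since 9 CSS mm is about 34 CSS px. Buttons 40 px apart would pass.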

2.5.6 No Swipe Trap: When touch input behavior is modified by built-in assistive technology so that touch focus can be moved to a component of the page using swipe gestures, then focus can be moved away from that component using swipe gestures or the user is advised of the method for moving focus away. (Level A)

Gregg: Advised in an accessible way to all users?

2.5.7 Pinch Zoom: Browser pinch zoom is not blocked by the page's viewport meta element so that it can be used to zoom the page to 200%. Restrictive values for user-scalable and maximum-scale attributes of this meta element should be avoided.

David: Have to fix "should be avoided" or send to advisory

Gregg: Maybe better as a failure of 1.4.4. FAILURE: Blocking the zoom feature (pinch zoom or other) without providing some other method for achieving 200% magnification or better.

Patrick: Just wondering whether it makes any difference here that most mobile browsers (Chrome, Firefox, IE, Edge) provide settings to override/force zooming even when a page has disabled it. iOS/Safari is the only mainstream mobile browser that currently does not provide such a setting, granted. But what if it did too?
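Here is a rough TypeScript sketch of how the proposed failure could be detected from a page's viewport `content` string. It is a heuristic, not a browser's actual viewport algorithm. It flags `user-scalable=no` (or `0`) and any `maximum-scale` below 2, on the assumption that the initial scale is 1, so that a scale of 2 corresponds to 200%. As Patrick notes, some browsers let users override these values anyway. Function names are hypothetical.

```typescript
// Split a viewport meta "content" string into lower-cased key/value pairs.
// Commas are the usual separator; semicolons are also accepted here as a
// lenient assumption, since some pages use them.
function parseViewportContent(content: string): Record<string, string> {
  const result: Record<string, string> = {};
  for (const part of content.split(/[,;]/)) {
    const eq = part.indexOf("=");
    const key = (eq === -1 ? part : part.slice(0, eq)).trim().toLowerCase();
    if (key === "") continue;
    result[key] = eq === -1 ? "" : part.slice(eq + 1).trim().toLowerCase();
  }
  return result;
}

// Heuristic: does this viewport setting stop users pinch-zooming to 200%?
// Assumes an initial scale of 1, so maximum-scale < 2 caps zoom below 200%.
function blocksZoomTo200(content: string): boolean {
  const v = parseViewportContent(content);
  const scalable = v["user-scalable"];
  if (scalable === "no" || scalable === "0") return true;
  const max = v["maximum-scale"];
  if (max !== undefined) {
    const n = parseFloat(max);
    if (!isNaN(n) && n < 2) return true;
  }
  return false;
}
```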

2.5.8 Device manipulation: When device manipulation gestures are provided, touch and keyboard operable alternative control options are available.

Gregg: How is this different from “all must be keyboard operable”? This says that if it is a gesture, then it must be gesture and keyboard operable. That looks the same as saying it must be keyboard operable.

David: It adds "Touch".

New Possible Guideline: Changing Screen Orientation (Portrait/Landscape)

3.4 Flexible Orientation: Ensure users can use the content in the orientation that suits their circumstances

Gregg: Ensure is a requirement. Is this always possible?

Possible New Success Criteria

3.4.1 Expose Orientation: Changes in orientation are programmatically exposed to ensure detection by assistive technology such as screen readers.

Gregg: This is not a web content issue but a mobile device issue. Hmmm, how about an alert? Again, if it isn't always possible, it shouldn't be an SC. Maybe it is always possible? Home screens?

Patrick: Agree with Gregg this is not a web content issue as currently stated. Also, not every orientation change needs something like an alert to the user...what if nothing actually changes on the page when switching between portrait and landscape - does an AT user need to know that they just rotated the device? Perhaps the intent here is to ensure web content notifies the user if an orientation change had some effect, like a complete change in layout (for instance, a tab navigation in landscape turning into an accordion in portrait; a navigation bar in landscape turning into a button+dropdown in portrait)? If so, this needs rewording, along similar lines to a change in context?

Jon: Yes, that is the intention. For example, if you change from landscape to portrait a set of links disappears and now there is a button menu instead. Or controls disappear or appear depending on the orientation.
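Following Jon's example, here is a minimal TypeScript sketch of the refined intent. It announces an orientation change only when the change actually alters the layout (here, links collapsing into a menu button), not on every rotation. The `navModeFor` mapping and the messages are hypothetical. In a browser, the returned text might be written into an `aria-live` region from a `change` listener on `matchMedia("(orientation: portrait)")`.

```typescript
type Orientation = "portrait" | "landscape";
type NavMode = "link-list" | "menu-button";

// Hypothetical responsive rule: full link list in landscape, menu button in portrait.
function navModeFor(orientation: Orientation): NavMode {
  return orientation === "landscape" ? "link-list" : "menu-button";
}

// Return a message for AT users only if the rotation changed the navigation layout.
function orientationAnnouncement(previous: Orientation, next: Orientation): string | null {
  const before = navModeFor(previous);
  const after = navModeFor(next);
  if (before === after) return null; // nothing changed on the page: stay quiet
  return after === "menu-button"
    ? "Navigation links moved into the Menu button."
    : "Navigation links are now shown as a list.";
}
```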

New Possible techniques for Success Criteria 3.2.3

If the navigation bar is collapsed into a single icon, the entries in the drop-down list that appear when activating the icon are still in the same relative order as the full navigation menu.

Gregg: Good to focus this as technique for WCAG.
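The relative-order condition in this technique can be checked mechanically. In the TypeScript sketch below (function name hypothetical), the collapsed menu passes if its entries appear in the full navigation in the same order, i.e. if they form a subsequence of it. Entries are compared by label for simplicity; a real check might compare link targets instead.

```typescript
// True if every entry in `shown` appears in `full`, in the same relative order.
function preservesRelativeOrder(full: string[], shown: string[]): boolean {
  let matched = 0;
  for (const entry of full) {
    if (matched < shown.length && entry === shown[matched]) matched++;
  }
  return matched === shown.length;
}
```

For example, with a full menu of Home, Products, About, Contact, a collapsed list of Home, About, Contact passes, while About, Home does not.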

A website, when viewed on different screen sizes and in different orientations, has some components that are hidden or appear in a different order. The components that are shown, however, remain consistent for any screen size and orientation.

New Techniques for 3.3.2 Labels or Instructions

Instructions (e.g. overlays, tooltips, tutorials) should be provided to explain which gestures can be used to control a given interface and whether there are alternatives.

Gregg: Good – advisory techniques.

Advisory Technique for Grouping operable elements that perform the same action (4.4 in mobile doc)

When multiple elements perform the same action or go to the same destination (e.g. link icon with link text), these should be contained within the same actionable element. This increases the touch target size for all users and benefits people with dexterity impairments. It also reduces the number of redundant focus targets, which benefits people using screen readers and keyboard/switch control.

Gregg: Good technique for WCAG. Oh, this is the same as H2, no? Are you just suggesting adding this text to H2? Good idea.
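The following TypeScript sketch folds adjacent actionable elements that share a destination into a single element. An example is an icon link followed by a text link to the same URL. Folding them removes the redundant focus target. The `ActionableItem` model and `mergeAdjacent` function are hypothetical. In HTML, the equivalent fix is to place the icon and the text inside one `a` element, with the image given empty alt text so the name is not repeated, as H2 describes.

```typescript
// Hypothetical model of an actionable element on a page.
interface ActionableItem {
  label: string;       // accessible name; empty for a decorative icon
  destination: string; // href or action identifier
}

// Folds each run of adjacent items with the same destination into one item,
// so the user gets one larger target and one focus stop instead of several.
function mergeAdjacent(items: ActionableItem[]): ActionableItem[] {
  const merged: ActionableItem[] = [];
  for (const item of items) {
    const last = merged.length > 0 ? merged[merged.length - 1] : undefined;
    if (last !== undefined && last.destination === item.destination) {
      // Same destination as the previous element: fold it in.
      // Skip empty or repeated labels so the accessible name is not duplicated.
      if (item.label.length > 0 && item.label !== last.label) {
        last.label = last.label.length > 0 ? `${last.label} ${item.label}` : item.label;
      }
    } else {
      merged.push({ ...item }); // copy so the caller's array is not mutated
    }
  }
  return merged;
}
```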

4.5 Provide clear indication that elements are actionable

New Guideline

1.6 Make interactive elements distinguishable

New Success Criteria

1.6.1 Triggers Distinguishable: Elements that trigger changes should be sufficiently distinct to be clearly distinguishable from non-actionable elements (content, status information, etc.).

Gregg: Just as true for non-mobile, BUT not testable. What does “sufficiently distinct” mean? Or “clearly distinguishable”? WCAG requires that they be programmatically determined, so users could use AT to make them very visible (much more so than designers would ever permit). But I’m not sure how you can create something testable out of this. Make it an ADVISORY TECHNIQUE?

New Sufficient Techniques for 1.6.1

- Conventional Shape: Button shape (rounded corners, drop shadows), checkbox, select rectangle with arrow pointing downwards

- Iconography: conventional visual icons (question mark, home icon, burger icon for menu, floppy disk for save, back arrow, etc.)

- Color Offset: shape with different background color to distinguish the element from the page background, different text color

- Conventional Style: Underlined text for links, color for links

- Conventional positioning: Commonly used position such as a top left position for back button (iOS), position of menu items within left-aligned lists in drop-down menus for navigation

Gregg: Not sure how these are sufficient by themselves to meet the above. This has to do with making things findable or understandable – not distinguishable.

Set the virtual keyboard to the type of data entry required 5.1

New technique under 1.3.1 Info and Relationships

Data Mask: Set the virtual keyboard to the type of data entry required

Gregg: Good advisory technique.
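Here is a minimal TypeScript sketch of the data mask idea for web content. It maps a few hypothetical field categories to standard HTML `type` and `inputmode` values, which browsers use as hints when choosing an on-screen keyboard. The `FieldKind` categories and the `inputAttributes` helper are assumptions for illustration. The browser and operating system still decide which keyboard is actually shown. Native platforms offer comparable settings, for example `keyboardType` on iOS and `android:inputType` on Android.

```typescript
// Hypothetical field categories used by this sketch.
type FieldKind = "phone" | "email" | "url" | "search" | "integer" | "decimal";

interface KeyboardHints {
  type: string;      // value for the input element's type attribute
  inputmode: string; // value for the inputmode attribute
}

// The values on the right are standard HTML type and inputmode keywords.
const KEYBOARD_HINTS: Record<FieldKind, KeyboardHints> = {
  phone:   { type: "tel",    inputmode: "tel" },
  email:   { type: "email",  inputmode: "email" },
  url:     { type: "url",    inputmode: "url" },
  search:  { type: "search", inputmode: "search" },
  // type="text" plus inputmode requests a numeric keypad while leaving
  // validation of the entered text under the author's control.
  integer: { type: "text",   inputmode: "numeric" },
  decimal: { type: "text",   inputmode: "decimal" },
};

// Renders the attributes as they would appear on an <input> element.
function inputAttributes(kind: FieldKind): string {
  const hints = KEYBOARD_HINTS[kind];
  return `type="${hints.type}" inputmode="${hints.inputmode}"`;
}
```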

New Success Criteria under 4.1

4.1.4 Non-interference of AT: Content does not interfere with default functionality of platform level assistive technology

Gregg: How would content know what this was? For example, if a page provided self-voicing, this might interfere with the platform's screen reader. So no page can ever self-voice?

Advisory techniques: Small Screen Size

Advisory techniques:

Consider mobile when initially designing the layout and relevancy of content.

(Rationale for not being a sufficient technique yet: the word "minimizing" is not testable. Can we quantify it somehow?)

Where necessary, adapt the information provided on mobile compared to desktop/laptop versions with a dedicated mobile version or a responsive design

- a dedicated mobile version contains content tailored for mobile use. For example, the content may contain fewer content modules, fewer images, or focus on important mobile usage scenarios.

- a responsive design contains content that stays the same, but CSS stylesheets are used to render it differently depending on the viewport width. For example, on narrow screens the navigation menus may be hidden until the user taps a menu button

(Rationale for not being a sufficient technique yet: the word "necessary" is not testable. Can we quantify it somehow?)

Minimizing the amount of information that is put on each page compared to desktop/laptop versions by providing a dedicated mobile version or a responsive design.

(Rationale for not being a sufficient technique yet: the words "minimizing" and "important" are not testable. Can we quantify them somehow?)

Providing a reasonable default size for content and touch controls (see B.2 Touch Target Size and Spacing) to minimize the need for users with low vision to zoom in and out.

(Rationale for not being a sufficient technique yet: it may not apply in ALL circumstances.)

Adapting the length of link text to the viewport width.

(Rationale for not being a sufficient technique yet: Can we provide specific link lengths for specific viewport sizes? Probably not.)

Positioning form fields below, rather than beside, their labels (in portrait layout)

Advisory techniques: 2.2 Zoom/Magnification

Support for system fonts that follow platform level user preferences for text size.

(Rationale for not being a sufficient technique: can this be done?)

Gregg Comment: This looks like a technique for 1.4.4, but you should say “to at least 200%” or else it could not be sufficient.

Provide on-page controls to change the text size.

(Rationale for not being a sufficient technique: best practice, but usually not big enough, redundant with other zooming, and extra work.)

Advisory techniques: Contrast (2.3)

The default point size for mobile platforms might be larger than the default point size used on non-mobile devices. When determining which contrast ratio to follow, developers should apply the lessened contrast ratio only when text is roughly equivalent to 1.2 times (120%) the default platform size for bold text, or 1.5 times (150%) the default platform size for regular text.

(Rationale for not being an SC: "roughly equivalent" is not testable. Can we settle on something determinable and testable?)

Gregg Comment: How does an author know that someone will be viewing their content on a mobile device? Or what size of mobile device? A tablet vs. an iPhone 4 is mega different. Not sure how you can make an SC out of this.

Advisory Techniques for 3.2 Touch Target Size and Spacing

Ensuring that touch targets close to the minimum size are surrounded by a small amount of inactive space.

Rationale for not being a Success Criterion: Cannot measure "small amount". Can we quantify it?

Gregg: What is the evidence that this is of value? It is not true of many keyboards. Are they all unusable? Also, if you define a gap, see the notes above on “what size screen for that gap?”

Advisory Techniques for touchscreen gestures

Gestures in apps should be as easy as possible to carry out.

Rationale for not being a Success Criterion: Cannot measure "as easy as possible". Can we rework it?

Some (but not all) mobile operating systems provide work-around features that let the user simulate complex gestures with simpler ones using an onscreen menu.


David: Rationale for not being a Success Criterion: Cannot measure this or apply it in all circumstances. Can we rework it?

Gregg: It SHOULD be required. But it is already covered by “all functions from keyboard interface”, since that would provide an alternate method. So there is already an alternate way to do this. NOTE: again, for some devices it may not be possible to make something accessible. A brooch that you tap on and ask questions, and that answers in audio, would not be usable by someone who is deaf. The fact that you can’t make it usable would not be a reason to rewrite the accessibility rules to make it possible for it to pass. It simply would always be inaccessible. Accessibility rules do not say that everything must be accessible to all. They say that if it is reasonable or not an undue burden or some such, then it needs to do x or y or z. Some things are not required to be accessible to some groups. That does not make them accessible; it only means they are not required to be accessible. Re: keyboard interface, there may be some IoT devices that do not have remote interfaces, and the IoT device itself is too small or limited to be accessible. We don’t rewrite the rules to make it possible for it to pass. We simply say that it is not accessible and it is not possible or reasonable to make it so. Most IoT does have a remote interface, so that can be accessible.

Usually, design alternatives exist to allow changes to settings via simple tap or swipe gestures.

Rationale for not being a Success Criterion: Cannot measure this or apply it in all circumstances. Can we rework it?

Advisory technique for Device manipulation Gestures

Some (but not all) mobile operating systems provide work-around features that let the user simulate device shakes, tilts, etc. from an onscreen menu.

Rationale for it not being a Success Criterion: It doesn't apply to all situations. Can we quantify it?

Advisory technique for placing buttons where they are easy to access (consistent layout)

Developers should also consider that an easy-to-use button placement for some users might cause difficulties for others (e.g. left- vs. right-handed use, assumptions about thumb range of motion). Therefore, flexible use should always be the goal.

Rationale for it not being a Success Criterion: It doesn't apply to all situations. Can we quantify it?

Gregg Comment: Quantifying it would be required, but since it doesn't apply to many pages that have interactive content all over the page, quantification is not relevant.

Advisory technique for Positioning important page elements before the page scroll 4.3

Positioning important page information so it is visible without requiring scrolling can assist users with low vision and users with cognitive impairments.

Rationale for it not being a Success Criterion: It doesn't apply to all situations. Can we quantify it?

Gregg: Agree, so an advisory technique for WCAG?

Advisory technique Provide easy methods for data entry 5.2

Reduce the amount of text entry needed by providing select menus, radio buttons, check boxes or by automatically entering known information (e.g. date, time, location).

Rationale for it not being a Success Criterion: It doesn't apply to all situations. Can we quantify it?

Gregg: Can’t be SC because it is prescriptive and lists specific solutions – when others may also apply and be better.

Other ideas to consider

- Moving transitions trigger vertigo in some users: http://www.alphr.com/apple/1001057/why-apple-s-next-operating-systems-are-already-making-users-sick (via David)

- iOS (or MacBook) should have separate sound outputs, so that a screen reader user can play a movie on a connected TV for friends and listen to VoiceOver (VO) to operate the movie without subjecting others to VoiceOver. This is an operating system and hardware issue that authors can't address. (Via Janina Sajka)

- Can we add touch and hold duration to our touch section? I have an aunt who has lost feeling in her fingers. She mentioned that touching the buttons on her iPhone for too long causes a long-press action. Android allows for changing the touch and release duration, but I do not see a similar setting on iPhone/iPad. (Alan Smith on list)

- Duration of touch needs to be distinguishable for those who don't know how long they pushed

- Force for those who cannot push at different strengths or who do not know how hard they pushed

Understanding Mobile Document

Introduction

This document provides informative guidance (but does not set requirements) with regard to interpreting and applying Web Content Accessibility Guidelines (WCAG) 2.0 [WCAG20] to web and non-web mobile content and applications.

While the World Wide Web Consortium (W3C) Web Accessibility Initiative (WAI) is primarily concerned with web technologies, its guidance is also relevant to non-web technologies. The W3C-WAI has published the Note Guidance on Applying WCAG 2.0 to Non-Web Information and Communications Technologies (WCAG2ICT) to provide authoritative guidance on how to apply WCAG to non-web technologies such as mobile native applications. The current document is a mobile-specific extension of this effort.

W3C Mobile Web Initiative Recommendations and Notes pertaining to mobile technologies also include the Mobile Web Best Practices and the Mobile Web Application Best Practices. These offer general guidance to developers on how to create content and applications that work well on mobile devices. The current document is focused on the accessibility of mobile web content and applications to people with disabilities and is not intended to supplant any other W3C work.

WCAG 2.0 and Mobile Content/Applications

"Mobile" is a generic term for a broad range of wireless devices and applications that are easy to carry and use in a wide variety of settings, including outdoors. Mobile devices range from small handheld devices (e.g. feature phones, smartphones) to somewhat larger tablet devices. The term also applies to "wearables" such as "smart"-glasses, "smart"-watches and fitness bands, and is relevant to other small computing devices such as those embedded into car dashboards, airplane seatbacks, and household appliances.

While mobile is viewed by some as separate from "desktop/laptop", and thus perhaps requiring new and different accessibility guidance, in reality there is no absolute divide between the categories. For example:

- many desktop/laptop devices now include touchscreen gesture control,

- many mobile devices can be connected to an external keyboard and mouse,

- web pages utilizing responsive design can transition into various screen sizes even on a desktop/laptop when the browser viewport is resized or zoomed in, and

- mobile operating systems have been used for laptop devices.

Furthermore, the vast majority of user interface patterns from desktop/laptop systems (e.g. text, hyperlinks, tables, buttons, pop-up menus, etc.) are equally applicable to mobile. Therefore, it's not surprising that a large number of existing WCAG 2.0 techniques can be applied to mobile content and applications (see Appendix A). Overall, WCAG 2.0 is highly relevant to both web and non-web mobile content and applications.

That said, mobile devices do present a mix of accessibility issues that are different from the typical desktop/laptop. The "Discussion of Mobile-Related Issues" section that follows explains how these issues can be addressed in the context of WCAG 2.0 as it exists or with additional best practices. All of the advice in this document can be applied to mobile web sites, mobile web applications, and hybrid web-native applications. Most of the advice also applies to native applications (also known as "mobile apps").

Note: WCAG 2.0 does not provide testable success criteria for some of the mobile-related issues. The work of the Mobile Accessibility Task Force has been to develop techniques and best practices in these areas. When the techniques or best practices don't map to specific WCAG success criteria, they aren't given a sufficient, advisory or failure designation. This doesn't mean that they are optional for creating accessible web content on a mobile platform, but rather that they cannot currently be assigned a designation. The Task Force anticipates that some of these techniques will be included as sufficient or advisory in a potential future iteration of WCAG.

The current document references existing WCAG 2.0 Techniques that apply to mobile platforms (see Appendix A) and provides new best practices, which may in the future become WCAG 2.0 Techniques that directly address emerging mobile accessibility challenges such as small screens, touch and gesture interfaces, and changing screen orientation.

Other W3C-WAI Guidelines Related to Mobile

UAAG 2.0 and Accessible Mobile Browsers

The User Agent Accessibility Guidelines (UAAG) 2.0 [UAAG2] is meant for the developers of user agents (e.g. web browsers and media players), whether for desktop/laptop or mobile operating systems. A user agent that follows UAAG 2.0 will improve accessibility through its own user interface, through options it provides for rendering and interacting with content, and through its ability to communicate with other technologies, including assistive technologies.

To assist developers of mobile browsers, the UAAG 2.0 Reference support document contains numerous mobile examples. These examples are also available in a separate list of mobile-related examples, maintained by the User Agent Accessibility Guidelines Working Group (UAWG).

ATAG 2.0 and Accessible Mobile Authoring Tools

The Authoring Tool Accessibility Guidelines (ATAG) 2.0 [ATAG2] provides guidelines for the developers of authoring tools, whether for desktop/laptop or mobile operating systems. An authoring tool that follows ATAG 2.0 will be both more accessible to authors with disabilities (Part A) and designed to enable, support, and promote the production of more accessible web content by all authors (Part B).

To assist developers of mobile authoring tools, the Implementing ATAG 2.0 support document contains numerous mobile authoring tool examples.

Discussion of Mobile-Related Issues

1. Mobile accessibility considerations primarily related to Principle 1: Perceivable

1.1 Small Screen Size

One of the most common characteristics of mobile devices is the small size of their screens. This limited size places practical constraints on the amount of information that can be effectively perceived by users at any one time, even when high screen resolution might enable large amounts of information to be rendered. The amount of information that can be displayed is even further limited when magnification is used, for example by people with low vision. See 2.2 Zoom/Magnification.

Some best practices for helping users to make the most of small screens include:

- Consider mobile when initially designing the layout and relevancy of content.

- Where necessary, adapt the information provided on mobile compared to desktop/laptop versions with a dedicated mobile version or a responsive design

- a dedicated mobile version contains content tailored for mobile use. For example, the content may contain fewer content modules, fewer images, or focus on important mobile usage scenarios.

- a responsive design contains content that stays the same, but CSS stylesheets are used to render it differently depending on the viewport width. For example, on narrow screens the navigation menus may be hidden until the user taps a menu button.

- Minimizing the amount of information that is put on each page compared to desktop/laptop versions by providing a dedicated mobile version or a responsive design.

- Providing a reasonable default size for content and touch controls (see B.2 Touch Target Size and Spacing) to minimize the need for users with low vision to zoom in and out.

- Adapting the length of link text to the viewport width.

- Positioning form fields below, rather than beside, their labels (in portrait layout)
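Several of the practices above amount to decisions made from the viewport size: collapsing navigation, adapting link text, and placing labels above fields in portrait layout. The TypeScript sketch below makes those decisions explicit. The 600 CSS pixel breakpoint and all names are assumptions, not values from WCAG. In practice the same logic is usually written as CSS media queries. Portrait is treated as height greater than or equal to width, which is how the CSS `orientation` media feature defines it.

```typescript
// Hypothetical sketch of layout decisions for a responsive design.

interface ViewportInfo {
  widthPx: number;  // viewport width in CSS pixels
  heightPx: number; // viewport height in CSS pixels
}

interface LayoutChoices {
  collapseNavigation: boolean;       // menu button instead of the full navigation bar
  labelPosition: "above" | "beside"; // form labels relative to their fields
  linkTextVariant: "short" | "full"; // which version of link text to render
}

// Illustrative breakpoint only; real sites choose breakpoints from their content.
const NARROW_BREAKPOINT_PX = 600;

function chooseLayout(vp: ViewportInfo): LayoutChoices {
  const narrow = vp.widthPx < NARROW_BREAKPOINT_PX;
  // Same rule as the CSS orientation media feature: portrait when height >= width.
  const portrait = vp.heightPx >= vp.widthPx;
  return {
    collapseNavigation: narrow,
    labelPosition: portrait || narrow ? "above" : "beside",
    linkTextVariant: narrow ? "short" : "full",
  };
}
```

A short link text variant still needs to describe the purpose of the link on its own or in context.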

1.2 Zoom/Magnification

A variety of methods allow users to control content size on mobile devices with small screens. Some of these features are targeted at all users (e.g. browser “pinch zoom” features), while others tend to be made available as "accessibility features" targeted at people with visual or cognitive disabilities.

Note on reflow: There are important accessibility differences between zoom/magnification features that horizontally reflow content, especially text, and those that do not. When text content is not reflowed, users must pan back and forth as they read each line.

Zoom/Magnification features include the following:

- OS-level features

- Set default text size (typically controlled from the display settings). Note: System text size is often not supported by mobile browsers.

- Magnify entire screen (typically controlled from the accessibility settings). Note: Using this setting requires the user to pan vertically and horizontally.

- Magnifying lens view under user's finger (typically controlled from the accessibility settings)

- Browser-level features

- Set default text size of text rendered in the browser's viewport

- Reader modes that render the main content without certain types of extraneous content and at a user-specified text size

- Magnify browser's viewport (typically "pinch-zoom"). Note: Using this setting typically requires the user to pan vertically and horizontally, although some browsers have features that reflow the content at the new magnification level, overriding author attempts to prevent pinch-zoom.

The WCAG 2.0 success criterion that is most related to zoom/magnification is:

- 1.4.4 Resize text (Level AA)

SC 1.4.4 requires text to be resizable without assistive technology up to at least 200 percent. To meet this requirement, content must not prevent text magnification by the user.

Some methods for supporting magnification/zoom include:

- Use techniques that support text resizing without requiring horizontal panning. Relying on full viewport zooming (e.g. not blocking the browser's pinch zoom feature) requires the user to pan horizontally as well as vertically.

- Ensure that the browser pinch zoom is not blocked by the page's viewport meta element so that it can be used to zoom the page to at least 200%. Restrictive values for the user-scalable and maximum-scale properties in this meta element's content attribute should be avoided. While this technique meets the success criterion, it is less usable than supporting text resizing features that reflow content to the user's chosen viewport size.

- Support for OS text size settings. For web content this will depend on browser support.

- Provide on-page controls to change the text size.
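The viewport meta advice above can be checked mechanically. This TypeScript sketch flags `user-scalable=no` (or `0`) and a `maximum-scale` below 2. These are the two restrictive values that would stop pinch zoom from reaching 200%. It is a simplified, hypothetical checker, not a full parser of the viewport `content` attribute. Passing it does not by itself mean SC 1.4.4 is met.

```typescript
// Hypothetical checker for the content attribute of
// <meta name="viewport" content="...">. It flags only the two restrictive
// values discussed above and is not a complete viewport parser.

interface ViewportIssue {
  key: string;
  value: string;
  reason: string;
}

function checkViewportContent(content: string): ViewportIssue[] {
  const issues: ViewportIssue[] = [];
  // Entries are normally comma-separated; semicolons are tolerated here too.
  for (const entry of content.split(/[,;]/)) {
    const pair = entry.split("=");
    const key = pair[0].trim().toLowerCase();
    const value = (pair[1] || "").trim().toLowerCase();

    if (key === "user-scalable" && (value === "no" || value === "0")) {
      issues.push({ key, value, reason: "pinch zoom is disabled" });
    }
    if (key === "maximum-scale") {
      const scale = parseFloat(value);
      // Reaching 200% requires a scale factor of at least 2.
      if (!isNaN(scale) && scale < 2) {
        issues.push({ key, value, reason: "zoom is capped below 200%" });
      }
    }
  }
  return issues;
}
```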

Accessibility features geared toward specific populations of people with disabilities fall under the definition of assistive technology adopted by WCAG and thus cannot be relied upon to meet success criterion 1.4.4. For example, an OS-level zoom feature that magnifies all platform content and has features to specifically support people with low vision is likely considered an assistive technology.

1.3 Contrast

Mobile devices are more likely than desktop/laptop devices to be used in varied environments including outdoors, where glare from the sun or other strong lighting sources is more likely. This scenario heightens the importance of good contrast for all users and may compound the challenges that users with low vision have accessing content with poor contrast on mobile devices.

The WCAG 2.0 success criteria related to the issue of contrast are:

- 1.4.3 Contrast (Minimum) (Level AA) which requires a contrast of at least 4.5:1 (or 3:1 for large-scale text) and

- 1.4.6 Contrast (Enhanced) (Level AAA) which requires a contrast of at least 7:1 (or 4.5:1 for large-scale text).

SC 1.4.3 allows for different contrast ratios for large text. Allowing different contrast ratios for larger text is useful because larger text with wider character strokes is easier to read at a lower contrast. This gives designers more leeway for contrast of larger text, which is helpful for content such as titles. The threshold of 18-point text or 14-point bold text described in SC 1.4.3 was judged to be large enough to enable a lower contrast ratio for web pages displayed on a 15-inch monitor at 1024x768 resolution with a 24-inch viewing distance. Mobile device content is viewed on smaller screens and in different conditions, so this allowance for lessened contrast on large text must be considered carefully for mobile apps.

For instance, the default text size for mobile platforms might be larger than the default text size used on non-mobile devices. When determining which contrast ratio to follow, developers should apply the lessened contrast ratio only when text is roughly equivalent to 1.2 times (120%) the default platform size for bold text, or 1.5 times (150%) the default platform size for regular text. Note, however, that the use of text that is 1.5 times the default on mobile platforms does not imply that the text will be readable by a person with low vision. People with low vision will likely need and use additional platform-level accessibility features and assistive technology, such as increased text size and zoom features, to access mobile content.
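The following TypeScript sketch implements the WCAG 2.0 relative luminance and contrast ratio definitions. It also adds a hypothetical `requiredRatioAA` helper that applies the 1.2 times (bold) and 1.5 times (regular) thresholds discussed above, relative to a platform default text size supplied by the caller. The helper encodes the proposal under discussion, not a WCAG requirement. The default size is a parameter because the author cannot know which device will render the content.

```typescript
// sRGB color as three channels in the range 0 to 255.
type Rgb = [number, number, number];

// WCAG 2.0 linearization of one 8-bit sRGB channel.
function channelToLinear(c8: number): number {
  const c = c8 / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// WCAG 2.0 relative luminance.
function relativeLuminance([r, g, b]: Rgb): number {
  return 0.2126 * channelToLinear(r) + 0.7152 * channelToLinear(g) + 0.0722 * channelToLinear(b);
}

// WCAG 2.0 contrast ratio (L1 + 0.05) / (L2 + 0.05), with L1 the lighter color.
function contrastRatio(fg: Rgb, bg: Rgb): number {
  const l1 = relativeLuminance(fg);
  const l2 = relativeLuminance(bg);
  return (Math.max(l1, l2) + 0.05) / (Math.min(l1, l2) + 0.05);
}

// Hypothetical: text counts as "large" when it is at least 1.5x the platform
// default size, or at least 1.2x when bold, as proposed in this section.
// Returns the Level AA minimum ratio that would then apply.
function requiredRatioAA(textSize: number, platformDefaultSize: number, bold: boolean): number {
  const factor = textSize / platformDefaultSize;
  const large = bold ? factor >= 1.2 : factor >= 1.5;
  return large ? 3 : 4.5;
}
```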

1.4 Non-Linear Screen Layouts

With limited screen “real estate” but a variety of gesture options available, mobile developers have experimented with a variety of screen layouts beyond the conventional web paradigm in which the user begins at the “top” and generally works down. Some mobile layouts start the user somewhere in the “middle” and provide highly dynamic visual experiences in which new content may be pulled in from any direction or the user’s point of regard may shift in various directions as previously off-screen content is brought on-screen.

Such user interfaces can be disorienting when the only indicators of the state of the user interface and what is happening in response to user actions are visual.

The WCAG 2.0 success criteria related to the issue of non-linear layouts are:

- 1.3.1 Info and Relationships (Level A)

- 1.3.2 Meaningful Sequence (Level A)

2. Mobile accessibility considerations primarily related to Principle 2: Operable

2.1 Keyboard Control for Touchscreen Devices

Mobile device design has evolved away from built-in physical keyboards (e.g. fixed, slide-out) towards devices that maximize touchscreen area and display an on-screen keyboard only when the user has selected a user interface control that accepts text input (e.g. a textbox).

However, keyboard accessibility remains as important as ever. WCAG 2.0 requires keyboard control at Level A and keyboard control is supported by most major mobile operating systems via keyboard interfaces, which allow mobile devices to be operated using external physical keyboards (e.g. keyboards connected via Bluetooth, USB On-The-Go) or alternative on-screen keyboards (e.g. scanning on-screen keyboards).

Supporting these keyboard interfaces benefits several groups with disabilities:

- People with visual disabilities who can benefit from some characteristics of physical keyboards over touchscreen keyboards (e.g. clearly separated keys, key nibs and more predictable key layouts).

- People with dexterity or mobility disabilities, who can benefit from keyboards optimized to minimize inadvertent presses (e.g. differently shaped, spaced and guarded keys) or from specialized input methods that emulate keyboard input.

- People who can be confused by the dynamic nature of onscreen keyboards and who can benefit from the consistency of a physical keyboard.

Several WCAG 2.0 success criteria are relevant to effective keyboard control:

- 2.1.1 Keyboard (Level A)

- 2.1.2 No Keyboard Trap (Level A)

- 2.4.3 Focus Order (Level A)

- 2.4.7 Focus Visible (Level AA)
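For custom controls that are not native buttons or links, keyboard support usually means responding to the expected keys. This TypeScript sketch encodes the common convention: buttons activate on Enter and Space, links activate on Enter, and checkboxes toggle on Space. The role set and function name are simplified assumptions. In a browser, the function could be called from a `keydown` handler with `event.key`, where Space is reported as `" "`. The handler should call `preventDefault()` for Space so the page does not scroll. Native `button` and `a` elements provide this behavior without extra code and are usually the better choice.

```typescript
// Simplified set of custom control roles covered by this sketch.
type CustomControlRole = "button" | "link" | "checkbox";

// Returns true when a KeyboardEvent.key value should activate the control.
function isActivationKey(key: string, role: CustomControlRole): boolean {
  switch (role) {
    case "button":
      return key === "Enter" || key === " "; // Enter or Space
    case "link":
      return key === "Enter";                // links do not respond to Space
    case "checkbox":
      return key === " ";                    // Space toggles a checkbox
    default:
      return false;
  }
}
```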

2.2 Touch Target Size and Spacing

The high screen resolution of mobile devices means that many interactive elements can be shown together on a small screen. But these elements must be big enough and have enough distance from each other so that users can safely target them by touch.

Best practices for touch target size include the following:

- Ensuring that touch targets are at least 9 mm high by 9 mm wide, independent of the screen size, device or resolution.

- Ensuring that touch targets close to the minimum size are surrounded by a small amount of inactive space.

Note: Screen magnification should not need to be used to obtain this size, because magnifying the screen often introduces the need to pan horizontally as well as vertically, which can decrease usability.
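The 9 mm minimum translates into different pixel counts depending on pixel density. This TypeScript sketch converts millimeters to pixels for a given pixels-per-inch value and checks a target against the minimum. The function names are hypothetical. The density is a parameter because it differs by platform. CSS defines 96 CSS pixels per inch, and Android defines a density-independent pixel as 1/160 inch, so 9 mm is roughly 34 CSS pixels or roughly 57 dp. On real devices the physical size of these units varies, so the results are approximate.

```typescript
const MM_PER_INCH = 25.4;

// Converts a physical length to pixels at the given density.
function mmToPixels(mm: number, pixelsPerInch: number): number {
  return (mm / MM_PER_INCH) * pixelsPerInch;
}

// Checks a target's width and height (in the same pixel unit as
// pixelsPerInch) against a minimum physical size, 9 mm by default.
function meetsMinimumTarget(widthPx: number, heightPx: number, pixelsPerInch: number, minMm: number = 9): boolean {
  const minPx = mmToPixels(minMm, pixelsPerInch);
  return widthPx >= minPx && heightPx >= minPx;
}
```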

2.3 Touchscreen Gestures

Many mobile devices are designed to be primarily operated via gestures made on a touchscreen. These gestures can be simple, such as a tap with one finger, or very complex, involving multiple fingers, multiple taps and drawn shapes.

Some (but not all) mobile operating systems provide work-around features that let the user simulate complex gestures with simpler ones using an onscreen menu.

Some best practices when deciding on touchscreen gestures include the following:

- Gestures in apps should be as easy as possible to carry out. This is especially important for screen reader interaction modes that replace direct touch manipulation by a two-step process of focusing and activating elements. It is also a challenge for users with motor or dexterity impairments or people who rely on head pointers or a stylus where multi-touch gestures may be difficult or impossible to perform. Often, interface designers have different options for how to implement an action. Widgets requiring complex gestures can be difficult or impossible to use for screen reader users. Usually, design alternatives exist to allow changes to settings via simple tap or swipe gestures.

- Activating elements via the click event. Using the mouseup or touchend event to trigger actions helps prevent unintentional actions during touch and mouse interaction. Mouse users clicking on actionable elements (links, buttons, submit inputs) should have the opportunity to move the cursor outside the element to prevent the event from being triggered. This allows users to change their minds without being forced to commit to an action. In the same way, elements accessed via touch interaction should generally trigger an event (e.g. navigation, submits) only when the touchend event is fired (i.e. when all of the following are true: the user has lifted the finger off the screen, the last position of the finger is inside the actionable element, and the last position of the finger equals the position at touchstart).

Technique M003 - Activating elements via the click event

Another issue with touchscreen gestures is that they might lack onscreen indicators that remind people how and when to use them. For example, swiping in from the left side of the screen to open a menu is not discoverable without an indicator or advisement of the gesture. See 4.6 Provide instructions for custom touchscreen and device manipulation gestures.

Note: While improving the accessibility of touchscreen gestures is important, keyboard accessibility is still required by some users and to meet WCAG 2.0. See 3.1 Keyboard Control for Touchscreen Devices.
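The activation conditions listed under "Activating elements via the click event" can be written as a pure function. This TypeScript sketch is illustrative only. Browsers already apply broadly similar logic before dispatching `click`, which is why listening for `click` is recommended. The `slopPx` tolerance is a hypothetical parameter. Its default of 0 matches the wording above, although real touch input rarely ends at exactly the starting position.

```typescript
interface TapPoint { x: number; y: number; }
interface TargetBounds { left: number; top: number; right: number; bottom: number; }

function isInside(p: TapPoint, b: TargetBounds): boolean {
  return p.x >= b.left && p.x <= b.right && p.y >= b.top && p.y <= b.bottom;
}

// Meant to be evaluated when touchend fires (the finger has been lifted).
// Activates only if the finger was lifted inside the element and did not
// move farther than slopPx from where the touch started.
function shouldActivateOnTouchEnd(start: TapPoint, end: TapPoint, target: TargetBounds, slopPx: number = 0): boolean {
  const dx = end.x - start.x;
  const dy = end.y - start.y;
  const moved = Math.sqrt(dx * dx + dy * dy);
  return isInside(end, target) && moved <= slopPx;
}
```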

2.4 Device Manipulation Gestures

In addition to touchscreen gestures, many mobile operating systems provide developers with control options that are triggered by physically manipulating the device (e.g. shaking or tilting). While device manipulation gestures can help developers create innovative user interfaces, they can also be a challenge for people who have difficulty holding or are unable to hold a mobile device.

Some (but not all) mobile operating systems provide work-around features that let the user simulate device shakes, tilts, etc. from an onscreen menu.

Therefore, even when device manipulation gestures are provided, developers should still provide touch and keyboard operable alternative control options. See 3.1 Keyboard Control for Touchscreen Devices.

- 2.1.1 Keyboard (Level A)

Another issue with control via device manipulation gestures is that they might lack onscreen indicators that remind people how and when to use them. See Touchscreen gesture instructions. See 4.6 Provide instructions for custom touchscreen and device manipulation gestures.
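One way to ensure that device manipulation gestures are never the only way to trigger a command is to register every input for a command in one place and check for missing alternatives. In this hypothetical TypeScript sketch, `CommandBinding` and `findMissingAlternatives` are illustrative names. The values "touch" and "keyboard" stand for whatever touch-operable and keyboard-operable controls the app provides.

```typescript
type InputKind = "shake" | "tilt" | "touch" | "keyboard";

interface CommandBinding {
  command: string;     // e.g. "undo"
  inputs: InputKind[]; // every way the command can be triggered
}

function isMotionInput(input: InputKind): boolean {
  return input === "shake" || input === "tilt";
}

// Returns the commands that can be triggered by device manipulation but lack
// a touch alternative, a keyboard alternative, or both.
function findMissingAlternatives(bindings: CommandBinding[]): string[] {
  return bindings
    .filter((b) => b.inputs.some(isMotionInput))
    .filter((b) => b.inputs.indexOf("touch") === -1 || b.inputs.indexOf("keyboard") === -1)
    .map((b) => b.command);
}
```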

2.5 Placing buttons where they are easy to access

Mobile sites and applications should position interactive elements where they can be easily reached when the device is held in different positions.

When designing mobile web content and applications, many developers attempt to optimize use with one hand. This can benefit people with disabilities who may only have one hand available. However, developers should also consider that an easy-to-use button placement for some users might cause difficulties for others (e.g. left- vs. right-handed use, assumptions about thumb range of motion). Therefore, flexible use should always be the goal.

Some (but not all) mobile operating systems provide work-around features that let the user temporarily shift the display downwards or sideways to facilitate one-handed operation.

3. Mobile accessibility considerations related primarily to Principle 3: Understandable

3.1 Changing Screen Orientation (Portrait/Landscape)

Some mobile applications automatically set the screen to a particular display orientation (landscape or portrait) and expect that users will respond by rotating the mobile device to match. However, some users have their mobile devices mounted in a fixed orientation (e.g. on the arm of a power wheelchair).

Therefore, mobile application developers should try to support both orientations. If it is not possible to support both orientations, developers should ensure that it is easy for all users to change the orientation to return to a point at which their device orientation is supported.

Changes in orientation must be programmatically exposed to ensure detection by assistive technology such as screen readers. For example, if a screen reader user is unaware that the orientation has changed the user might perform incorrect navigation commands.
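For web content, one way to make an orientation change known to screen reader users is to announce it through an ARIA live region. The TypeScript sketch below assumes the Screen Orientation API: `screen.orientation`, its `type` values such as `landscape-primary`, and its `change` event. The `OrientationSource` interface, `describeOrientation`, `watchOrientation`, and the `announce` callback are hypothetical names for this example. Native applications would rely on their platform's own mechanisms instead.

```typescript
// Minimal structural type covering what this sketch needs; in a browser,
// screen.orientation provides a `type` string and a "change" event.
interface OrientationSource {
  readonly type: string;
  addEventListener(type: "change", listener: () => void): void;
}

// Maps a Screen Orientation API type (e.g. "landscape-primary") to a short
// phrase that can be spoken by a screen reader.
function describeOrientation(type: string): string {
  if (type.startsWith("portrait")) return "Portrait orientation";
  if (type.startsWith("landscape")) return "Landscape orientation";
  return "Orientation changed";
}

// Calls `announce` after every orientation change. `announce` is a
// hypothetical callback, e.g. one that writes into an aria-live="polite"
// element so that screen readers speak the message.
function watchOrientation(
  source: OrientationSource,
  announce: (message: string) => void,
): void {
  source.addEventListener("change", () => {
    announce(describeOrientation(source.type));
  });
}

// Browser usage (not run here; `liveRegion` is a hypothetical element):
// watchOrientation(screen.orientation, (m) => { liveRegion.textContent = m; });
```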

3.2 Consistent Layout

Components that are repeated across multiple pages should be presented in a consistent layout. In responsive web design, where components are arranged based on device size and screen orientation, web pages within a particular view (a given screen size and orientation) should be consistent in the placement of repeated components and navigational components. Consistency between different screen sizes and screen orientations is not a requirement under WCAG 2.0.

For example:

- A Web site has a logo, a title, a search form and a navigation bar at the top of each page; these appear in the same relative order on each page where they are repeated. On one page the search form is missing but the other items are still in the same order. When the Web site is viewed on a small screen in portrait mode, the navigation bar is collapsed into a single icon but entries in the drop-down list that appears when activating the icon are still in the same relative order.

- A Web site, when viewed on the different screen sizes and in different orientations, has some components that are hidden or appear in a different order. The components that show, however, remain consistent for any screen size and orientation.
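The first example can be checked mechanically. For each pair of pages rendered in the same view, keep only the components that appear on both pages and compare their order. The TypeScript sketch below assumes each page has already been reduced to a list of component identifiers in reading order; extracting that list is not shown. `hasConsistentRelativeOrder` and the identifiers are hypothetical.

```typescript
// Returns true when every pair of pages lists the components they share in
// the same relative order. Components missing from one page are ignored.
function hasConsistentRelativeOrder(pages: string[][]): boolean {
  for (let i = 0; i < pages.length; i++) {
    for (let j = i + 1; j < pages.length; j++) {
      const onBoth = (id: string) => pages[i].includes(id) && pages[j].includes(id);
      const a = pages[i].filter(onBoth);
      const b = pages[j].filter(onBoth);
      if (a.length !== b.length || a.some((id, k) => id !== b[k])) return false;
    }
  }
  return true;
}

const home = ["logo", "title", "search", "nav"];
const contact = ["logo", "title", "nav"]; // search form missing: still consistent
const about = ["title", "logo", "search", "nav"]; // logo and title swapped

console.log(hasConsistentRelativeOrder([home, contact])); // true
console.log(hasConsistentRelativeOrder([home, about])); // false
```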

The WCAG 2.0 success criteria that are most related to the issue of consistency are:

- 3.2.3 Consistent Navigation (Level AA)

- 3.2.4 Consistent Identification (Level AA)

3.3 Positioning important page elements before the page scroll

The small screen size on many mobile devices limits the amount of content that can be displayed without scrolling.

Positioning important page information so it is visible without requiring scrolling can assist users with low vision and users with cognitive impairments.

If a user with low vision has the screen magnified, only a small portion of the page might be viewable at a given time. Placing important elements before the page scroll allows those who use screen magnifiers to locate important information without having to scroll the view to move the magnified area. Placing important elements before the page scroll also makes it possible to locate content without performing an interaction. This assists users who have cognitive impairments such as short-term memory disabilities. Placing important elements before the page scroll also helps ensure that elements are placed in a consistent location. Consistent and predictable location of elements assists people with cognitive impairments and low vision.
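The effect of magnification on what is visible before scrolling can be estimated with simple arithmetic. At a zoom factor z, a viewport of width W and height H shows only a W/z by H/z region of the page. The TypeScript sketch below assumes the magnified view starts at the top-left corner of the page; real magnifiers may instead follow focus or the pointer. The `Rect` type, `visibleWithoutScrolling`, and the sample sizes are illustrative.

```typescript
// Element position and size in CSS pixels, measured from the page's top-left.
interface Rect { x: number; y: number; width: number; height: number; }

// Assumes the magnified area starts at the top-left corner, so at `zoom` only
// a (viewportWidth / zoom) by (viewportHeight / zoom) region is visible
// before the user scrolls or pans.
function visibleWithoutScrolling(
  el: Rect,
  viewportWidth: number,
  viewportHeight: number,
  zoom = 1,
): boolean {
  const visibleWidth = viewportWidth / zoom;
  const visibleHeight = viewportHeight / zoom;
  return el.x + el.width <= visibleWidth && el.y + el.height <= visibleHeight;
}

const searchBox: Rect = { x: 16, y: 120, width: 280, height: 40 };
console.log(visibleWithoutScrolling(searchBox, 375, 667)); // true
console.log(visibleWithoutScrolling(searchBox, 375, 667, 2)); // false
```

In this example, the search box fits in a 375 by 667 pixel viewport at normal size. At 2x magnification only the leftmost 187.5 pixels are visible, so the user would have to pan to reach the box.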

3.4 Grouping operable elements that perform the same action

When multiple elements perform the same action or go to the same destination (e.g. link icon with link text), these should be contained within the same actionable element. This increases the touch target size for all users and benefits people with dexterity impairments. It also reduces the number of redundant focus targets, which benefits people using screen readers and keyboard/switch control.

The WCAG 2.0 success criteria that are most related to grouping of actionable elements are:

- 2.4.4 Link Purpose (In Context) (Level A)

- 2.4.9 Link Purpose (Link Only) (Level AAA)

For more information on grouping operable elements, see technique H2: Combining adjacent image and text links for the same resource.
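A simple audit for this pattern looks for adjacent links that point to the same destination. The TypeScript sketch below assumes the links have already been extracted in reading order. The `LinkInfo` shape and `adjacentDuplicateLinks` are hypothetical names for this example.

```typescript
// A link in reading order: its destination and its text or text alternative.
interface LinkInfo { href: string; label: string; }

// Returns index pairs of neighbouring links with the same destination; each
// pair is a candidate for merging into one actionable element.
function adjacentDuplicateLinks(links: LinkInfo[]): Array<[number, number]> {
  const pairs: Array<[number, number]> = [];
  for (let i = 0; i + 1 < links.length; i++) {
    if (links[i].href === links[i + 1].href) pairs.push([i, i + 1]);
  }
  return pairs;
}

const links: LinkInfo[] = [
  { href: "/cart", label: "Cart" }, // cart icon
  { href: "/cart", label: "Cart" }, // "Cart" text next to the icon
  { href: "/help", label: "Help" },
];
console.log(adjacentDuplicateLinks(links)); // [ [ 0, 1 ] ]
```

Each reported pair is a candidate for a single link that contains both the icon and the text. As in technique H2, the image inside the combined link can then use an empty `alt` attribute, because the link text already names the destination.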

3.5 Provide clear indication that elements are actionable

Elements that trigger changes should be sufficiently distinct to be clearly distinguishable from non-actionable elements (content, status information, etc.). Providing a clear indication that elements are actionable is relevant for web and native mobile applications that have actionable elements like buttons or links, especially in interaction modes where actionable elements are commonly detected visually (touch and mouse use). Interactive elements must also be detectable by users who rely on a programmatically determined accessible name (e.g. screen reader users).

Visual users who interact with content using touch or visual cursors (e.g. mice, touchpads, joysticks) should be able to clearly distinguish actionable elements such as links or buttons. Existing interface design conventions are aimed at indicating that these visual elements are actionable. The principle of redundant coding ensures that elements are indicated as actionable by more than one distinguishing visual feature. Following these conventions benefits all users, but especially users with vision impairments.

Visual features that can set an actionable element apart include shape, color, style, positioning, text label for an action, and conventional iconography.

Examples of distinguishing features:

- Conventional shape: Button shape (rounded corners, drop shadows), checkbox, select rectangle with arrow pointing downwards

- Iconography: conventional visual icons (question mark, home icon, hamburger icon for menu, floppy disk for save, back arrow, etc.)

- Color offset: shape with different background color to distinguish the element from the page background, different text color

- Conventional style: Underlined text for links, color for links

- Conventional positioning: Commonly used position such as a top left position for back button (iOS), position of menu items within left-aligned lists in drop-down menus for navigation
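The principle of redundant coding can be expressed as a checklist. In the TypeScript sketch below, the `VisualCue` values mirror the distinguishing features listed above. The sketch assumes that the cues an element actually has come from a design review. The threshold of two cues is one reading of "more than one distinguishing visual feature". All names are hypothetical.

```typescript
// Distinguishing visual features, following the list above.
type VisualCue =
  | "shape"
  | "iconography"
  | "colorOffset"
  | "style"
  | "positioning"
  | "textLabel";

// Redundant coding: an actionable element should be indicated by more than
// one distinguishing visual feature (at least `minimum` of them).
function hasRedundantCoding(cues: ReadonlySet<VisualCue>, minimum = 2): boolean {
  return cues.size >= minimum;
}

const bareIcon = new Set<VisualCue>(["iconography"]); // flat glyph, no button shape
const submitButton = new Set<VisualCue>(["shape", "colorOffset", "textLabel"]);

console.log(hasRedundantCoding(bareIcon)); // false
console.log(hasRedundantCoding(submitButton)); // true
```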

The WCAG 2.0 success criteria do not directly address the issue of clear visual indication that elements are actionable, but the issue is related to the following success criteria:

- 3.2.3 Consistent Navigation (Level AA)

- 3.2.4 Consistent Identification (Level AA)

3.6 Provide instructions for custom touchscreen and device manipulation gestures

The ability to provide control via custom touchscreen and device manipulation gestures can help developers create efficient new interfaces. However, for many people, custom gestures can be a challenge to discover, perform and remember.

Therefore, instructions (e.g. overlays, tooltips, tutorials, etc.) should be provided to explain what gestures can be used to control a given interface and whether there are alternatives. To be effective, the instructions should, themselves, be easily discoverable and accessible. The instructions should also be available anytime the user needs them, not just on first use, though on first use they may be made more apparent through highlighting or some other mechanism.

These WCAG 2.0 success criteria are relevant to providing instructions for gestures:

- 3.3.2 Labels or Instructions (Level A)

- 3.3.5 Help (Level AAA)
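The following TypeScript sketch models two points from the paragraph above. Instructions for every custom gesture stay available whenever the user asks for them, and they are highlighted only on first use. The `GestureInstruction` fields and the `GestureHelp` class are hypothetical. Storing the first-use flag across sessions (for example, in local storage) is not shown.

```typescript
// One entry per custom gesture; the fields are illustrative, not a platform API.
interface GestureInstruction {
  gesture: string;     // e.g. "Swipe in from the left edge"
  effect: string;      // e.g. "Opens the main menu"
  alternative: string; // e.g. "Menu button in the top toolbar"
}

class GestureHelp {
  private readonly entries: GestureInstruction[] = [];
  private seen = false;

  register(entry: GestureInstruction): void {
    this.entries.push(entry);
  }

  // Instructions remain available on every use, not only the first one.
  instructions(): string[] {
    return this.entries.map(
      (e) => `${e.gesture}: ${e.effect}. Alternative: ${e.alternative}.`,
    );
  }

  // True only the first time it is called, so the help can be made more
  // apparent (e.g. shown as an overlay) on first use.
  shouldHighlight(): boolean {
    const first = !this.seen;
    this.seen = true;
    return first;
  }
}

const help = new GestureHelp();
help.register({
  gesture: "Swipe in from the left edge",
  effect: "Opens the main menu",
  alternative: "Menu button in the top toolbar",
});
console.log(help.shouldHighlight()); // true
console.log(help.shouldHighlight()); // false
console.log(help.instructions());
```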

4. Mobile accessibility considerations related primarily to Principle 4: Robust

4.1 Set the virtual keyboard to the type of data entry required

On some mobile devices, the standard keyboard can be customized in the device settings and additional custom keyboards can be installed. Some mobile devices also provide different virtual keyboards depending on the type of data entry. The keyboard can be selected by the user or set to a specific type for a given field. For example, using the different HTML5 form field controls (see Method Editor API) on a website will show different keyboards automatically when users enter information into that field. Setting the type of keyboard helps prevent errors and ensures formats are correct, but subtle changes in the keyboard can be confusing for people who are using a screen reader.
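On the web, the virtual keyboard is usually selected through standard HTML attributes: the input `type` (for example `email`, `tel`, `url`), the `inputmode` attribute, and `autocomplete` tokens. The TypeScript sketch below maps a few field kinds to those attributes and renders a labelled `<input>`. The `FieldKind` names, the `ATTRS` table, and the `renderInput` helper are assumptions made for this illustration.

```typescript
// Hypothetical field categories for an illustrative form.
type FieldKind = "email" | "phone" | "url" | "postalCode" | "quantity";

interface InputAttrs { type: string; inputmode?: string; autocomplete?: string; }

// Standard HTML attributes that hint which virtual keyboard to show.
const ATTRS: Record<FieldKind, InputAttrs> = {
  email: { type: "email", autocomplete: "email" },
  phone: { type: "tel", autocomplete: "tel" },
  url: { type: "url", autocomplete: "url" },
  // Postal codes are not numeric everywhere, so keep a text keyboard.
  postalCode: { type: "text", autocomplete: "postal-code" },
  // One common pattern for whole numbers: text input with a numeric keypad.
  quantity: { type: "text", inputmode: "numeric" },
};

// Renders a labelled input. Arguments are assumed to be trusted constants;
// real code would escape them before building HTML.
function renderInput(name: string, label: string, kind: FieldKind): string {
  const extra = Object.entries(ATTRS[kind])
    .map(([attr, value]) => ` ${attr}="${value}"`)
    .join("");
  return `<label for="${name}">${label}</label><input id="${name}" name="${name}"${extra}>`;
}

console.log(renderInput("phone", "Phone number", "phone"));
// <label for="phone">Phone number</label><input id="phone" name="phone" type="tel" autocomplete="tel">
```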

4.2 Provide easy methods for data entry

Users can enter information on mobile devices in multiple ways such as on-screen keyboard, Bluetooth keyboard, touch, and speech. Text entry can be time-consuming and difficult in certain circumstances. Reduce the amount of text entry needed by providing select menus, radio buttons, check boxes or by automatically entering known information (e.g. date, time, location).
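As an example of automatically entering known information, the TypeScript sketch below pre-fills an empty date field with today's date. It uses the `YYYY-MM-DD` format that `<input type="date">` expects for its value and leaves any value the user has already entered untouched. `toDateInputValue` and `prefillToday` are hypothetical helpers. Filling in location (for example, through the Geolocation API) would follow the same pattern but requires the user's permission, and is not shown.

```typescript
// Formats a Date as YYYY-MM-DD using the device's local calendar date.
function toDateInputValue(d: Date): string {
  const yyyy = String(d.getFullYear()).padStart(4, "0");
  const mm = String(d.getMonth() + 1).padStart(2, "0"); // getMonth() is 0-based
  const dd = String(d.getDate()).padStart(2, "0");
  return `${yyyy}-${mm}-${dd}`;
}

// Pre-fills an empty field with today's date. `field` is any object with a
// writable `value`, such as an HTMLInputElement.
function prefillToday(field: { value: string }, now: Date = new Date()): void {
  if (field.value === "") field.value = toDateInputValue(now);
}

const field = { value: "" };
prefillToday(field, new Date(2015, 8, 10)); // 8 = September
console.log(field.value); // 2015-09-10
```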

4.3 Support the characteristic properties of the platform

Mobile devices provide many features to help users with disabilities interact with content. These include platform characteristics such as zoom, larger fonts, and captions. The features and functions available differ depending on the device and operating system version. For example, most platforms have the ability to set large fonts, but not all applications honor it for all text. Also, some applications might increase font size but not wrap text, causing horizontal scrolling.
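The wrapping problem can be illustrated with a greedy word wrap. The TypeScript sketch below is only a model. Real platforms lay out text themselves, and the practical fix is to avoid blocking that reflow (for example, with fixed-width containers or `white-space: nowrap` in CSS). `wrapText`, `charsPerLine`, and the assumption that an average glyph is about half the font size wide are made up for this example.

```typescript
// Greedy word wrap: breaks text into lines of at most `maxChars` characters.
// A single word longer than `maxChars` still gets a line of its own.
function wrapText(text: string, maxChars: number): string[] {
  const lines: string[] = [];
  let current = "";
  for (const word of text.split(/\s+/).filter((w) => w.length > 0)) {
    if (current === "") {
      current = word;
    } else if (current.length + 1 + word.length <= maxChars) {
      current += " " + word;
    } else {
      lines.push(current);
      current = word;
    }
  }
  if (current !== "") lines.push(current);
  return lines;
}

// Rough line capacity, assuming an average glyph width of half the font
// size (only an approximation for proportional fonts).
function charsPerLine(containerWidthPx: number, fontSizePx: number): number {
  return Math.max(1, Math.floor(containerWidthPx / (fontSizePx * 0.5)));
}

const message = "Your order has shipped and will arrive on Thursday";
console.log(wrapText(message, charsPerLine(320, 16)).length); // 2
console.log(wrapText(message, charsPerLine(320, 32)).length); // 3
```

Doubling the font size from 16 to 32 pixels in a 320-pixel-wide container adds a line instead of pushing text past the right edge and forcing horizontal scrolling.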

A. WCAG Techniques that apply to mobile

WCAG 2.0 Techniques that Apply to Mobile

B. UAAG 2.0 Success Criteria that apply to mobile

UAAG Mobile Accessibility Examples

C. Acknowledgments

- Members of the Mobile Accessibility Task Force:

- Kathleen Anderson

- Jonathan Avila

- Tom Babinszki

- Matthew Brough

- Michael Cooper (WCAG WG Staff Contact)

- Gavin Evans

- Detlev Fischer

- Alistair Garrison

- Marc Johlic

- David MacDonald

- Kim Patch (TF Facilitator - UAAG WG)

- Jan Richards

- Mike Shebanek

- Brent Shiver

- Alan Smith

- Jeanne Spellman (UAAG WG Staff Contact)

- Henny Swan

- Peter Thiessen

- Kathleen Wahlbin (TF Facilitator - WCAG WG)

- Chairs of the WCAG WG and the UAAG WG:

- Jim Allan (UAAG WG), Texas School for the Blind and Visually Impaired

- Andrew Kirkpatrick (WCAG WG), Adobe Systems

- Joshue O'Connor (WCAG WG), NCBI Centre of Inclusive Technology

D. References

D.1 Informative references

- [UAAG20]

- James Allan; Kelly Ford; Kimberly Patch; Jeanne F Spellman. User Agent Accessibility Guidelines (UAAG) 2.0. 25 September 2014. W3C Working Draft. URL: http://www.w3.org/TR/UAAG20/

- [WCAG20]

- Ben Caldwell; Michael Cooper; Loretta Guarino Reid; Gregg Vanderheiden et al. Web Content Accessibility Guidelines (WCAG) 2.0. 11 December 2008. W3C Recommendation. URL: http://www.w3.org/TR/WCAG20/

However, the accessibility gap is that developers don't ensure that someone running assistive technology can ALSO operate the system with touch. This is 2.5.4 below.